1. Installing TensorFlow

1. Installation

Version 1.12.0 is recommended.

pip install tensorflow==1.12.0

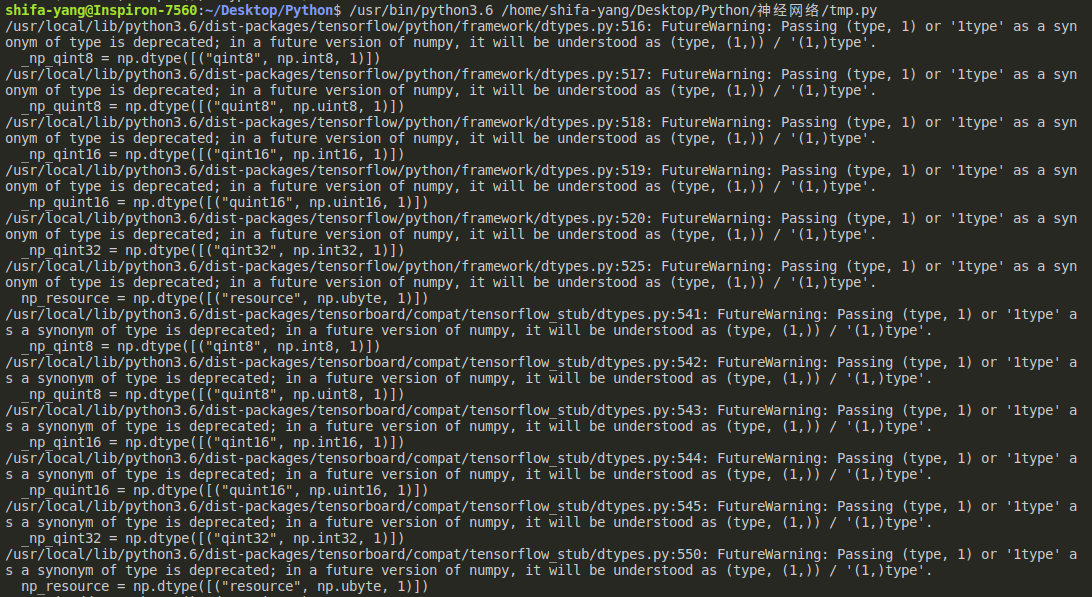

2. TensorFlow and NumPy version incompatibility

NumPy 1.18.0 is incompatible with TensorFlow 1.12.0; use NumPy 1.14.0 instead.

Install NumPy 1.14.0:

pip install numpy==1.14.0

3. Suppressing warnings

TensorFlow prints warnings at run time. Add the following statements to the program file to suppress them.

import os
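The listing above shows only its first line. One common way to silence these messages is the `TF_CPP_MIN_LOG_LEVEL` environment variable. The sketch below assumes that this is the setting intended here and that level `"2"` (hide INFO and WARNING output) is the desired one.

```python
import os

# Assumption: TF_CPP_MIN_LOG_LEVEL is the setting the truncated listing relied on.
# "2" hides INFO and WARNING messages from TensorFlow's C++ backend.
# The variable has to be set before TensorFlow is imported to take effect.
os.environ["TF_CPP_MIN_LOG_LEVEL"] = "2"
```

Place these lines at the very top of the script, above `import tensorflow as tf`.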

2. Basics

1. A simple program: a + b

import tensorflow as tf

2. Viewing the default graph

import tensorflow as tf

3. Custom graphs

import tensorflow as tf

4. Visualizing the graph

import tensorflow as tf

Running the program generates an event file in the ./神经网络/TensorFlow教程/06图的可视化/ directory. Then run the following command in a terminal:

tensorboard --logdir='./神经网络/TensorFlow教程/06图的可视化/'
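As a rough illustration of where such an event file comes from, the sketch below builds a tiny graph and writes it out with `tf.summary.FileWriter`. It is not the original program: the temporary log directory, the node names, and the `tf1` alias are assumptions made for this example. The alias only exists so that the same code also runs where the 1.x API is exposed as `tf.compat.v1`.

```python
import os
import tempfile

import tensorflow as tf

# Assumption: newer TensorFlow releases expose the 1.x graph API as tf.compat.v1;
# on 1.12 that attribute is absent and the top-level module is used directly.
tf1 = getattr(tf.compat, "v1", tf)

logdir = tempfile.mkdtemp()  # hypothetical log directory for this sketch

graph = tf1.Graph()
with graph.as_default():
    a = tf1.constant(2.0, name="a")
    b = tf1.constant(3.0, name="b")
    total = tf1.add(a, b, name="total")
    # Writing the graph creates an event file inside logdir.
    writer = tf1.summary.FileWriter(logdir, graph=graph)
    writer.close()

event_files = os.listdir(logdir)
print(event_files)
```

Pointing `tensorboard --logdir` at the directory that holds the event file then shows the graph in the browser.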

5. Sessions (Session)

import tensorflow as tf

6. Using feed_dict

import tensorflow as tf

7. Creating tensors

import tensorflow as tf

8. Changing tensor shape

import tensorflow as tf

9. Matrix operations

import tensorflow as tf

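3. Case studies

1. Linear regression model

1. How linear regression works

1. Build the model

$y = w_1 x_1 + w_2 x_2 + \dots + w_n x_n + b$

2. Loss function

Mean squared error

3. Optimize the loss

Gradient descent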
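The three steps above (a linear model, a mean squared error loss, and gradient descent) can be sketched without any framework. The snippet below is a minimal illustration for a single feature. It assumes noise-free synthetic data generated from $y = 0.8x + 0.7$. The names `fit_line`, `lr`, and `steps`, along with the step count and seed, are hypothetical choices made for the example.

```python
import random


def fit_line(xs, ys, lr=0.01, steps=2000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Residuals of the current model on every sample.
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of mean((w*x + b - y)^2) with respect to w and b.
        grad_w = 2.0 * sum(r * x for r, x in zip(residuals, xs)) / n
        grad_b = 2.0 * sum(residuals) / n
        # Step against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


random.seed(0)  # fixed seed so the run is repeatable
xs = [random.gauss(0.0, 1.0) for _ in range(100)]
ys = [0.8 * x + 0.7 for x in xs]  # assumed ground truth: w = 0.8, b = 0.7

w, b = fit_line(xs, ys)
print("w = %.4f, b = %.4f" % (w, b))
```

Because the data contain no noise, the fitted `w` and `b` should end up close to the values used to generate them.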

2. Design

1. Prepare the data

Generate 100 random points with a single feature, where x and y satisfy $y = kx + b$.

X.shape = (100, 1)

y.shape = (100, 1)

The data follow $y = 0.8x + 0.7$.

y_predict = tf.matmul(X, weight) + bias

2. Build the loss function

error = tf.reduce_mean(tf.square(y_predict - y))

3. Optimize the loss

tf.train.GradientDescentOptimizer(learning_rate=0.01).minimize(error)
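The design above can be put together roughly as follows. This is a sketch under stated assumptions, not the original implementation. The 1000-step training loop, the random initialisation of `weight` and `bias`, and the names `y_true`, `train_op`, and `tf1` are choices made for this example. The `tf1` alias is only there so that the same code also runs where the 1.x API is exposed as `tf.compat.v1`.

```python
import tensorflow as tf

# Assumption: newer TensorFlow releases expose the 1.x graph API as tf.compat.v1;
# on 1.12 that attribute is absent and the top-level module is used directly.
tf1 = getattr(tf.compat, "v1", tf)

graph = tf1.Graph()
with graph.as_default():
    # 1. Data: 100 random points with one feature, following y = 0.8x + 0.7.
    X = tf1.random_normal(shape=(100, 1))
    y_true = tf1.matmul(X, [[0.8]]) + 0.7

    # Trainable parameters, randomly initialised (an assumption of this sketch).
    weight = tf1.Variable(tf1.random_normal(shape=(1, 1)))
    bias = tf1.Variable(tf1.random_normal(shape=(1, 1)))
    y_predict = tf1.matmul(X, weight) + bias

    # 2. Loss: mean squared error between prediction and target.
    error = tf1.reduce_mean(tf1.square(y_predict - y_true))

    # 3. Optimizer: plain gradient descent with the learning rate from the design.
    train_op = tf1.train.GradientDescentOptimizer(learning_rate=0.01).minimize(error)
    init = tf1.global_variables_initializer()

with tf1.Session(graph=graph) as sess:
    sess.run(init)
    for _ in range(1000):  # the step count is an assumption
        sess.run(train_op)
    learned_w, learned_b, final_error = sess.run([weight, bias, error])

print(learned_w, learned_b, final_error)
```

Each `sess.run(train_op)` draws a fresh batch of 100 points, since `X` is a random op. The target is computed from that same batch, so the parameters still move toward 0.8 and 0.7.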

3. Implementation

import tensorflow as tf

4. Adding variable display

import tensorflow as tf

5. Adding namespaces

import tensorflow as tf

6. Saving the model

import tensorflow as tf

7. Loading the model

import tensorflow as tf

2. Handwritten digit recognition

1. Preparing the data

from tensorflow.examples.tutorials.mnist import input_data

2. Implementation

from tensorflow.examples.tutorials.mnist import input_data